A new computational approach, built on data-driven techniques, is making it possible to turn a simple 2D sketch into a realistic 3D shape with little or no user input.

A global team of computer scientists from the University of Hong Kong, Microsoft and the University of British Columbia has developed a novel framework that combines convolutional neural networks (CNNs) with geometric inference methods to compute 3D shapes from their corresponding sketches, faster and more intuitively than existing methods.

“Our tool can be used by artists to quickly and easily draft freeform shapes that can serve as base shapes and be further refined with advanced modeling software,” says Hao Pan, researcher at Microsoft Research Asia who led the work. “Novice users may also use it as an accessible tool to make stylish contents for their 3D creations and virtual worlds.”

The novel method builds on the team’s previous sketch-based 3D modeling work, BendSketch: Modeling Freeform Surfaces through 2D Sketching, a purely geometry-based method that requires annotated sketch inputs. Adds Pan, “We want to develop a method that is both intelligent, in the sense of requiring as few user inputs as possible and doing all needed inference automatically, and generic, so that we do not have to train a new machine learning model for each specific object category.”

Pan’s collaborators, Changjian Li and Wenping Wang at the University of Hong Kong, Yang Liu and Xin Tong at Microsoft Research Asia, and Alla Sheffer at the University of British Columbia, will present the work at SIGGRAPH Asia 2018 in Tokyo from 4 December to 7 December. The annual conference features the most respected technical and creative members in the field of computer graphics and interactive techniques, and showcases leading-edge research in science, art, gaming and animation, among other sectors.

Automatically transforming a sparse, imperfect 2D sketch into a robust 3D shape is difficult. The key challenge the researchers addressed in this work is the significant information disparity between a 2D sketch and a 3D model. Closing that gap is where an artist would normally add features such as bumps, bends, creases, valleys and ridges to the drawing to convey a 3D design. For each sketch demonstrated in their paper, the method uses a CNN to predict the corresponding 3D surface patch.

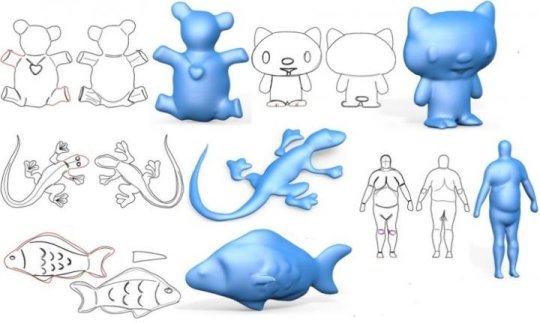

The researchers’ CNN is trained on a large dataset generated by rendering sketches of various 3D shapes with a non-photorealistic line rendering (NPR) method that automatically mimics human sketching of freeform shapes, such as a teddy bear, a bird or a fish. The researchers validated their new approach, compared it with existing methods and evaluated its performance. The results demonstrated that their method provides a user-friendly and more robust way to produce 3D models from 2D sketches.

One example included in their paper involved testing the new method with novice users who had little knowledge of, or training in, sketching or 3D modeling. They were given a 20-minute training demo on the new method and were then asked to create three target shapes of varying complexity: a bird, a teddy bear and a dolphin. The users completed the tasks, taking between 5 and 20 minutes. As noted in the paper, the novice participants found the sketches easy to produce with the researchers’ tool, and some also wanted to explore it further.

In future work, the researchers intend to explore several possible extensions of their algorithm. For example, their method currently models one surface patch at a time from a sketch in a given view, but it would be beneficial to be able to model a complete shape by sketching both front and back patches in a single view. They also hope to enhance the tool so that it can handle multi-scale modeling, so that large-scale shapes and fine-level details can both be sketched with ease.

Video: https://www.youtube.com/watch?v=Fz-f-e51zRQ
