
Through innovative use of a neural network that mimics image processing by the human brain, a research team reports accurate reconstruction of images transmitted over optical fibers for distances of up to a kilometer.

In The Optical Society’s journal for high-impact research, Optica, the researchers report teaching a type of machine learning algorithm known as a deep neural network to recognize images of numbers from the pattern of speckles they create when transmitted to the far end of a fiber. The work could improve endoscopic imaging for medical diagnosis, boost the amount of information carried over fiber-optic telecommunication networks, or increase the optical power delivered by fibers.

“We use modern deep neural network architectures to retrieve the input images from the scrambled output of the fiber,” said Demetri Psaltis of the Swiss Federal Institute of Technology in Lausanne, who led the research in collaboration with colleague Christophe Moser. “We demonstrate that this is possible for fibers up to 1 kilometer long,” he added, calling the work “an important milestone.”

Deciphering the blur

Optical fibers transmit information with light. Multimode fibers have much greater information-carrying capacity than single-mode fibers. Their many channels — known as spatial modes because they have different spatial shapes — can transmit different streams of information simultaneously.

While multimode fibers are well suited for carrying light-based signals, transmitting images through them is problematic. Light from the image travels through all of the channels, and what comes out the other end is a pattern of speckles that the human eye cannot decode.
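The scrambling can be illustrated with a common toy model in which a fiber acts as a fixed linear "transmission matrix" that mixes the input light across its modes. The sketch below is an illustration under stated assumptions, not the researchers' setup: it uses NumPy, treats each of 256 image pixels as one mode (the fiber in the study had roughly 4,500 channels), and draws a random unitary matrix as a stand-in for a real fiber. The helper names `random_unitary` and `fiber_output_intensity` are hypothetical.

```python
import numpy as np

def random_unitary(n, rng):
    """Draw a random n x n unitary matrix (toy stand-in for a fiber's mode mixing)."""
    z = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2)
    q, r = np.linalg.qr(z)
    # Rescale each column by a unit-magnitude phase so the draw is uniform over unitaries.
    d = np.diagonal(r)
    return q * (d / np.abs(d))

def fiber_output_intensity(image, transmission_matrix):
    """Send a flattened image through the toy fiber and return the output intensity."""
    field_in = image.astype(complex).ravel()
    field_out = transmission_matrix @ field_in  # every output mode mixes all input modes
    return np.abs(field_out) ** 2               # a camera records intensity, not phase

rng = np.random.default_rng(0)
image = np.zeros((16, 16))
image[4:12, 7:9] = 1.0                          # a crude vertical stroke, like a "1"
T = random_unitary(image.size, rng)             # assumption: 256 modes, random mixing
speckle = fiber_output_intensity(image, T)      # 256 intensity values; reshape to view
```

Because only intensity is recorded, the phase is lost, and the output bears no visible resemblance to the input even though the unitary matrix conserves total light power.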

To tackle this problem, Psaltis and his team turned to a deep neural network, a type of machine learning algorithm that functions much the way the brain does. Deep neural networks can give computers the ability to identify objects in photographs and help improve speech recognition systems. Input is processed through several layers of artificial neurons, each of which performs a small calculation and passes the result on to the next layer. The machine learns to identify the input by recognizing the patterns of output associated with it.
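The layer-by-layer processing described above can be sketched as a small fully connected network in NumPy. This is a hypothetical, untrained example: the layer sizes, the ReLU and softmax functions, the function names, and the 256-value input are illustrative assumptions, not the architecture used in the study.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def init_layers(sizes, rng):
    """Random weights and zero biases for each pair of consecutive layer sizes."""
    return [(rng.standard_normal((m, n)) * np.sqrt(2.0 / m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(x, layers):
    """Pass the input through each layer; each neuron computes a weighted sum plus bias."""
    for i, (w, b) in enumerate(layers):
        x = x @ w + b
        if i < len(layers) - 1:
            x = relu(x)      # hidden layers apply a nonlinearity before passing results on
    return softmax(x)        # final layer: one probability per digit, 0 through 9

rng = np.random.default_rng(0)
layers = init_layers([256, 64, 32, 10], rng)  # hypothetical layer sizes
speckle_batch = rng.random((5, 256))          # stand-in for 5 flattened speckle images
probs = forward(speckle_batch, layers)
predicted_digits = probs.argmax(axis=1)
```

Until it is trained, such a network's predictions are essentially arbitrary; training adjusts the weights so that the output associated with each digit becomes the most probable one.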

“If we think about the origin of neural networks, which is our very own brain, the process is simple,” explains Eirini Kakkava, a doctoral student working on the project. “When a person stares at an object, neurons in the brain are activated, indicating recognition of a familiar object. Our brain can do this because it gets trained throughout our life with images or signals of the same category of objects, which changes the strength of the connections between the neurons.” Researchers train an artificial neural network in essentially the same way, showing it examples of certain images (in this case, handwritten digits) until it can recognize new images of the same category that it has never seen before.
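In an artificial network, the "strength of the connections" that training changes corresponds to numerical weights. The sketch below is a deliberately simplified stand-in: a single-layer softmax classifier trained by plain gradient descent on synthetic clusters of points, written with NumPy. The data, learning rate, step count, and function names are assumptions for illustration only, not the researchers' training procedure.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the correct class."""
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))

def train_softmax(x, labels, n_classes, lr=0.1, steps=200, seed=0):
    rng = np.random.default_rng(seed)
    w = rng.standard_normal((x.shape[1], n_classes)) * 0.01  # initial connection strengths
    b = np.zeros(n_classes)
    onehot = np.eye(n_classes)[labels]
    history = []
    for _ in range(steps):
        z = x @ w + b
        z -= z.max(axis=1, keepdims=True)
        p = np.exp(z)
        p /= p.sum(axis=1, keepdims=True)
        history.append(cross_entropy(p, labels))
        grad_z = (p - onehot) / len(labels)  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * x.T @ grad_z               # strengthen or weaken each weight
        b -= lr * grad_z.sum(axis=0)
    return w, b, history

# Synthetic data: three noisy clusters in 20 dimensions, standing in for image classes.
rng = np.random.default_rng(1)
centers = rng.standard_normal((3, 20))
labels = rng.integers(0, 3, size=300)
x = centers[labels] + 0.5 * rng.standard_normal((300, 20))

w, b, history = train_softmax(x, labels, n_classes=3)
accuracy = ((x @ w + b).argmax(axis=1) == labels).mean()
```

The loss recorded in `history` falls as the weights are adjusted, which is the numerical counterpart of connections being strengthened or weakened through repeated exposure to examples.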

Learning by the numbers

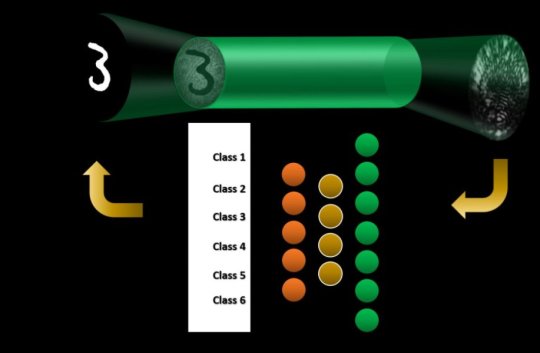

To train their system, the researchers turned to a database containing 20,000 samples of handwritten numbers, 0 through 9. They used 16,000 as training data, set aside 2,000 to validate the training, and kept another 2,000 for testing the validated system. They used a laser to illuminate each digit and sent the light beam through an optical fiber with approximately 4,500 channels to a camera at the far end. A computer measured how the intensity of the output light varied across the captured image, and they collected a series of examples for each digit.
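The 16,000/2,000/2,000 division of the 20,000 samples amounts to a disjoint split of sample indices. The article does not say how samples were assigned to each set, so the random shuffle, the fixed seed, and the hypothetical `split_indices` helper below are assumptions; the sketch uses NumPy.

```python
import numpy as np

def split_indices(n_samples, n_train, n_val, n_test, seed=0):
    """Shuffle sample indices once, then cut them into three non-overlapping sets."""
    if n_train + n_val + n_test != n_samples:
        raise ValueError("split sizes must add up to the number of samples")
    perm = np.random.default_rng(seed).permutation(n_samples)
    train = perm[:n_train]
    val = perm[n_train:n_train + n_val]   # used to check training progress
    test = perm[n_train + n_val:]         # held back until the model is validated
    return train, val, test

train_idx, val_idx, test_idx = split_indices(20_000, 16_000, 2_000, 2_000)
```

Keeping the test indices untouched until the end is what allows the reported accuracy to reflect images the network has never seen.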

Although the speckle patterns for the different digits all looked alike to the human eye, the neural network was able to discern differences and recognize the patterns of intensity associated with each digit. Testing with the set-aside images showed that the algorithm achieved 97.6 percent accuracy for images transmitted through a 0.1-meter-long fiber and 90 percent accuracy with a 1-kilometer length of fiber.

A simpler method

Navid Borhani, a member of the research team, says this machine learning approach is much simpler than other methods for reconstructing images passed through optical fibers, which require a holographic measurement of the output. The neural network was also able to cope with distortions caused by environmental disturbances to the fiber, such as temperature fluctuations or movements caused by air currents, which add noise to the image; this problem grows worse with fiber length.

“The remarkable ability of deep neural networks to retrieve information transmitted through multimode fibers is expected to benefit medical procedures like endoscopy and communications applications,” Psaltis said. Telecommunication signals often have to travel through many kilometers of fiber and can suffer distortions, which this method could correct. Doctors could use ultrathin fiber probes to collect images of the tracts and arteries inside the human body without needing complex holographic recorders or worrying about movement. “Slight movements because of breathing or circulation can distort the images transmitted through a multimode fiber,” Psaltis said. Deep neural networks are a promising solution for dealing with that noise.

Psaltis and his team plan to try the technique with biological samples to see whether it works as well with them as it does with handwritten numbers. They hope to conduct a series of studies using different categories of images to explore the possibilities and limits of their technique.

Story Source:

Materials provided by The Optical Society. Note: Content may be edited for style and length.
