Advances in computer-generated imagery have brought vivid, realistic animations to life, but the sounds associated with what we see simulated on screen, such as two objects colliding, are often recordings. Now researchers at Stanford University have developed a system that automatically renders accurate sounds for a wide variety of animated phenomena.

“There’s been a Holy Grail in computing of being able to simulate reality for humans. We can animate scenes and render them visually with physics and computer graphics, but, as for sounds, they are usually made up,” said Doug James, professor of computer science at Stanford University. “Currently there exists no way to generate realistic synchronized sounds for complex animated content, such as splashing water or colliding objects, automatically. This fills that void.”

The researchers will present their work on this sound synthesis system as part of ACM SIGGRAPH 2018, the leading conference on computer graphics and interactive techniques. In addition to enlivening movies and virtual reality worlds, this system could also help engineering companies prototype how products would sound before being physically produced, and hopefully encourage designs that are quieter and less irritating, the researchers said.

“I’ve spent years trying to solve partial differential equations — which govern how sound propagates — by hand,” said Jui-Hsien Wang, a graduate student in James’ lab and in the Institute for Computational and Mathematical Engineering (ICME), and lead author of the paper. “This is actually a place where you don’t just solve the equation but you can actually hear it once you’ve done it. That’s really exciting to me and it’s fun.”

Predicting sound

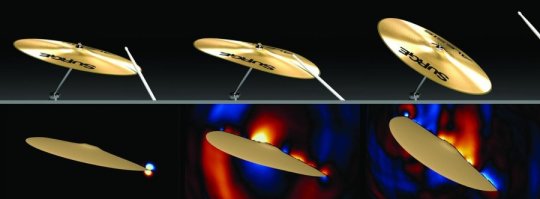

Informed by geometry and physical motion, the system figures out the vibrations of each object and how, like a loudspeaker, those vibrations excite sound waves. It computes the pressure waves cast off by rapidly moving and vibrating surfaces but does not replicate room acoustics. So, although it does not recreate the echoes in a grand cathedral, it can resolve detailed sounds from scenarios like a crashing cymbal, an upside-down bowl spinning to a stop, a glass filling up with water or a virtual character talking into a megaphone.

Most sounds associated with animations rely on pre-recorded clips, which require vast manual effort to synchronize with the action on-screen. These clips are also restricted to noises that already exist; they can’t predict anything new. Other systems that produce and predict sounds as accurately as the one developed by James and his team work only in special cases, or assume the geometry doesn’t deform very much. They also require a long pre-computation phase for each separate object.

“Ours is essentially just a render button with minimal pre-processing that treats all objects together in one acoustic wave simulation,” said Ante Qu, a graduate student in James’ lab and co-author of the paper.

The simulated sound that results from this method is highly detailed. It takes into account the sound waves produced by each object in an animation but also predicts how those waves bend, bounce or deaden based on their interactions with other objects and sound waves in the scene.
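To give a feel for what an acoustic wave simulation involves, here is a toy one-dimensional sketch in Python. It is not the researchers' method, which works on full 3D animated scenes. It only illustrates the general idea of a vibrating surface casting off pressure waves that travel to a listener and bounce off a wall. The function name, grid size, step counts and boundary treatment are all assumptions chosen for illustration. In particular, the vibrating surface is simplified to a directly prescribed pressure.

```python
import math


def simulate_1d_wave(n_cells=200, n_steps=400, courant=0.5,
                     drive_cycles=2, period_steps=40):
    """Toy leapfrog solver for the 1D wave equation p_tt = c^2 * p_xx.

    Hypothetical illustration only. `courant` is c * dt / dx and must
    stay at or below 1 for the scheme to remain stable.
    Returns the pressure recorded at a listener in the middle of the grid.
    """
    prev = [0.0] * n_cells   # pressure at the previous time step
    curr = [0.0] * n_cells   # pressure at the current time step
    listener = n_cells // 2  # grid cell where the "microphone" sits
    c2 = courant ** 2
    recorded = []

    for step in range(n_steps):
        nxt = [0.0] * n_cells
        # Interior cells: standard second-order update in time and space.
        for i in range(1, n_cells - 1):
            laplacian = curr[i + 1] - 2.0 * curr[i] + curr[i - 1]
            nxt[i] = 2.0 * curr[i] - prev[i] + c2 * laplacian

        # Left end: a "vibrating surface" that drives the pressure for a
        # few cycles and then goes quiet (a simplifying assumption).
        t = step + 1
        if t < drive_cycles * period_steps:
            nxt[0] = math.sin(2.0 * math.pi * t / period_steps)
        else:
            nxt[0] = 0.0

        # Right end: rigid wall, approximated by a zero pressure gradient,
        # so arriving waves bounce back into the domain.
        nxt[-1] = nxt[-2]

        prev, curr = curr, nxt
        recorded.append(curr[listener])

    return recorded


if __name__ == "__main__":
    signal = simulate_1d_wave()
    peak = max(abs(x) for x in signal)
    print(f"{len(signal)} samples recorded, peak pressure {peak:.3f}")
```

The listener hears nothing until the wave has had time to cross the grid, which is the same synchronization between motion and sound that the full system computes automatically for every object in a scene.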

Challenges ahead

In its current form, the group’s process takes a while to create the finished product. But, now that they have proven this technique’s potential, they can focus on performance optimizations, such as implementing their method on parallel GPU hardware, that should make it drastically faster.

And, even in its current state, the results are worth the wait.

“The first water sounds we generated with the system were among the best ones we had simulated — and water is a huge challenge in computer-generated sound,” said James. “We thought we might get a little improvement, but it is dramatically better than previous approaches even right out of the box. It was really striking.”

Although the group’s work has faithfully rendered sounds of various objects spinning, falling and banging into each other, more complex objects and interactions — like the reverberating tones of a Stradivarius violin — remain difficult to model realistically. That, the group said, will have to wait for a future solution.

Story Source:

Materials provided by Stanford University. Original written by Taylor Kubota.
