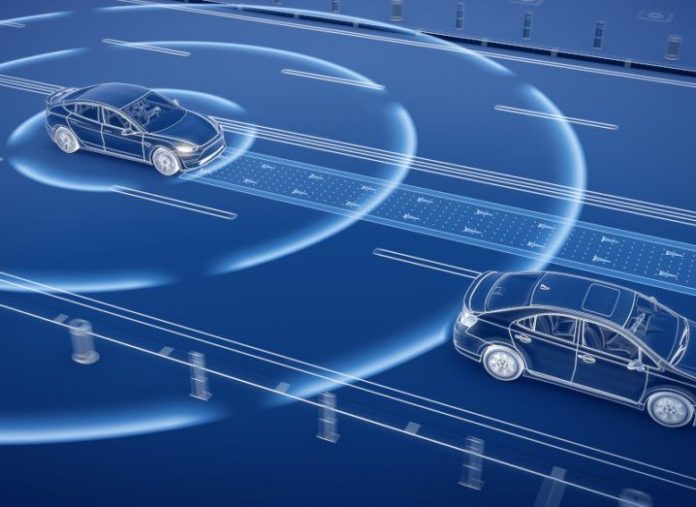

Autonomous vehicles will in the future be tasked with making decisions about the safety of their passengers, the occupants of other vehicles, and pedestrians. Jean-François Bonnefon of the Toulouse School of Economics in Toulouse, France, and colleagues are the first to examine human preferences as applied to the decisions that driverless vehicles will be making in the near future.

The majority of manufacturers and government review organizations predict that a fully autonomous vehicle culture would reduce vehicle accidents of all kinds by at least 90 percent. The aim of the study was to determine people’s preferences about who lives and who is injured or killed in autonomous vehicle accidents. Across numerous accident scenarios involving autonomous vehicles, the majority of respondents indicated that they prefer safety first for themselves and their families but opt for totally utilitarian vehicles for others.

This is the first instance where human moral decisions will be forced to become a part of the artificial intelligence that operates a machine. A totally utilitarian vehicle would opt for killing its own passengers rather than killing a larger number of other people. A totally self-protective vehicle would place a higher value on its passengers than on others. Science and technology have reached the age of Asimov’s “Three Laws of Robotics.”

The analysis has implications for manufacturers. Manufacturers can be held legally liable for the type of morality their vehicles are programmed with. The willingness to purchase or use a specific autonomous vehicle will be affected by human moral preferences. One has to wonder whether beings that are not absolutely moral (humans) are capable of designing a machine that is.